Numbers in JavaScript

Hi, today let's dive into how numbers work in JavaScript.

In JavaScript, every number (every value of the Number type) is a 64-bit floating-point value in the IEEE 754-2008 double-precision format. In this post we will see how that format works.

The designers of the IEEE 754 format chose to represent numbers in scientific notation, so a number like 340.15 becomes 3.4015 * 10^2. Since we are on a computer, we have to work in binary, so the number must be represented in base 2: a significand multiplied by a power of 2.
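To make the idea concrete, here is a small sketch. The decimal form uses the built-in `toExponential()`; the base-2 form uses a hypothetical helper, `toBinaryScientific`, that picks the power of 2 so the significand lands in [1, 2). It assumes a positive, finite, normalized input and relies on `Math.log2`, which engines only approximate, so values sitting exactly on a power of two deserve a second look. This is an illustration of the notation, not how engines actually store numbers.

```javascript
// Decimal scientific notation: with no argument, toExponential() prints
// as many digits as needed to identify the value uniquely.
const x = 340.15;
console.log(x.toExponential()); // 3.4015e+2

// Hypothetical helper: the base-2 analogue of scientific notation.
// Assumes a positive, finite, normalized value.
function toBinaryScientific(value) {
  // Math.log2 is engine-approximated; fine here because 256 < 340.15 < 512.
  const exponent = Math.floor(Math.log2(value));
  // Dividing by a power of 2 only changes the exponent, so it is exact.
  const significand = value / 2 ** exponent;
  return { significand, exponent };
}

console.log(toBinaryScientific(340.15)); // significand ≈ 1.3287109375, exponent 8
```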

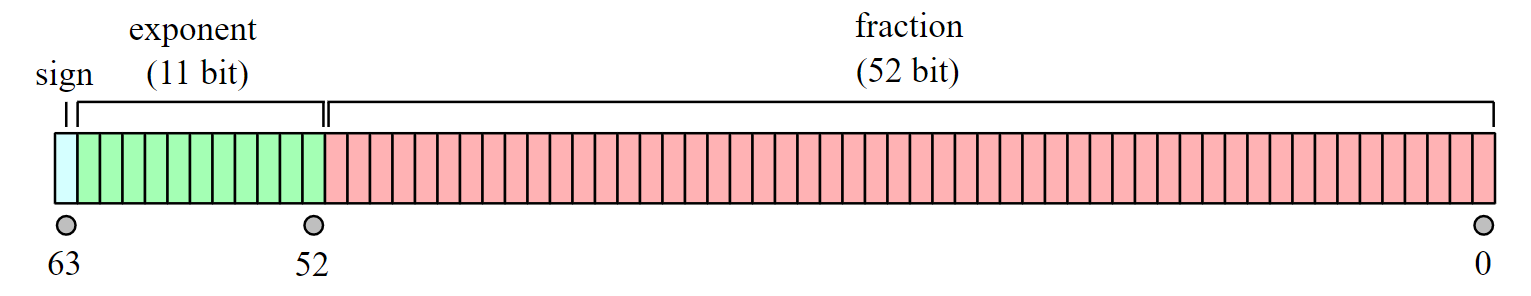

64-bit floats are laid out in binary as follows.

They are composed of 3 parts, in this order starting from the most significant bit:

- 1 bit for the sign: 0 for positive, 1 for negative.

- 11 bits for the biased exponent (the bias for 64-bit floats is 1023).[1]

- 52 bits for the mantissa.[2]
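One way to see this layout from inside JavaScript is to write a number into an 8-byte buffer and read the same bytes back as an unsigned 64-bit integer. The sketch below assumes an engine with `BigInt` and `DataView.prototype.getBigUint64` (ES2020 or later); `decompose` is a hypothetical helper name, not a built-in.

```javascript
// Hypothetical helper: split a number's 64 raw bits into the three fields.
function decompose(value) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, value); // big-endian by default
  // Read the same 8 bytes as one unsigned 64-bit integer (also big-endian).
  const bits = view.getBigUint64(0).toString(2).padStart(64, "0");
  return {
    sign: bits.slice(0, 1),      // 1 bit
    exponent: bits.slice(1, 12), // 11 bits, biased by 1023
    mantissa: bits.slice(12),    // 52 bits, implicit leading 1 not stored
  };
}

console.log(decompose(340.15));
// -1 = -1.0 * 2^0: sign "1", exponent 0 + 1023 = "01111111111", mantissa all zeros
console.log(decompose(-1));
```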

So how does our number 340.15 fit into those bits?

From number to binary:

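First, let's convert 340.15 to base 2, handling the integer part and the fractional part separately.

Integer part: 340 (divide by 2 repeatedly; each remainder is one bit):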

340 = 170 * 2 + 0

170 = 85 * 2 + 0

85 = 42 * 2 + 1

42 = 21 * 2 + 0

21 = 10 * 2 + 1

10 = 5 * 2 + 0

5 = 2 * 2 + 1

2 = 1 * 2 + 0

1 = 0 * 2 + 1

Reading the remainders from the last one up to the first: 340 in binary => 101010100 (check: 256 + 64 + 16 + 4 = 340)
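The repeated division above can be sketched in a few lines. `integerToBinary` is a hypothetical helper that assumes a non-negative safe integer; the built-in `Number.prototype.toString(2)` is used only as a cross-check.

```javascript
// Hypothetical helper: repeated division by 2, collecting remainders.
function integerToBinary(n) {
  if (n === 0) return "0";
  const remainders = [];
  while (n > 0) {
    remainders.push(n % 2); // remainder of n / 2 is the next bit
    n = Math.floor(n / 2);  // continue with the quotient
  }
  // The first remainder is the least significant bit, so read them backwards.
  return remainders.reverse().join("");
}

console.log(integerToBinary(340)); // 101010100
console.log((340).toString(2));    // 101010100
```

Forgetting the `reverse()` step is exactly what produces the mirrored pattern 001010101.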

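Fractional part: 0.15 (multiply by 2 repeatedly; the integer part of each product is the next bit, and only the fractional part is carried into the next step):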

0.15 * 2 = 0.3

0.3 * 2 = 0.6

0.6 * 2 = 1.2

0.2 * 2 = 0.4

0.4 * 2 = 0.8

0.8 * 2 = 1.6

0.6 * 2 = 1.2

0.2 * 2 = 0.4

0.4 * 2 = 0.8

...

0.15 in binary => 0.0010011001100110011... (after the first three digits, the group 0011 repeats forever, so 0.15 has no exact binary representation)
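The repeated multiplication can also be sketched in code. Multiplying the JavaScript value `0.15` by 2 would start from an already-rounded double, so this hypothetical `fractionToBinary` helper keeps the fraction as an exact ratio of integers instead (0.15 = 15/100). It assumes a proper fraction, `0 <= numerator < denominator`, with integers small enough to stay exact.

```javascript
// Hypothetical helper: binary digits of numerator/denominator, by doubling.
function fractionToBinary(numerator, denominator, bitCount) {
  let bits = "";
  for (let i = 0; i < bitCount; i++) {
    numerator *= 2;                 // "multiply by 2"
    if (numerator >= denominator) { // the product's integer part is 1
      bits += "1";
      numerator -= denominator;     // keep only the fractional part
    } else {
      bits += "0";                  // the product's integer part is 0
    }
  }
  return bits;
}

console.log(fractionToBinary(15, 100, 20)); // 00100110011001100110
```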

Thus in binary 340.15 is 101010100.0010011001100110011... To write it in scientific notation, we move the binary point 8 places to the left: $1.010101000010011001100110011\ldots \times 2^{8}$. The exponent is therefore 8, and the stored (biased) exponent is 8 + 1023 = 1031, which in binary is 10000000111.
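The normalization step can be sketched with plain strings of bits. `normalize` is a hypothetical helper: it assumes the integer part is at least 1 (so its first bit is "1") and that enough fraction bits are supplied. It simply cuts the mantissa at 52 bits and reports the next bit, so the rounding can be checked by hand rather than implementing full IEEE rounding.

```javascript
const BIAS = 1023;

// Hypothetical helper: turn "integerBits.fractionBits" into 1.xxx * 2^exponent.
function normalize(integerBits, fractionBits) {
  const exponent = integerBits.length - 1; // places the point moves left
  // Drop the leading 1: it is implicit and will not be stored.
  const digits = integerBits.slice(1) + fractionBits;
  return {
    exponent,
    biasedExponent: (exponent + BIAS).toString(2).padStart(11, "0"),
    mantissa: digits.slice(0, 52),
    // If this is "0", cutting here is the same as rounding to nearest.
    nextBit: digits[52],
  };
}

// 340 = 101010100, and 0.15 = 0.0010 0110 0110 ... (60 bits are plenty here)
const fraction = "0010" + "0110".repeat(14);
console.log(normalize("101010100", fraction));
```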

We have:

Sign: 0

Exponent: 10000000111

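Mantissa: 0101010000100110011001100110011001100110011001100110 (these are the first 52 bits after the leading 1. The expansion never ends, so it is rounded to nearest; here the 53rd bit is 0, so it simply rounds down. The leading 1 itself is implicit and not stored; denormal numbers, explained further down in this article, are the exception.)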

Full binary notation: 0100000001110101010000100110011001100110011001100110011001100110
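To check the hand-made encoding, we can glue the three fields together and let the engine read the 64-bit pattern back as a double. `bitsToNumber` is a hypothetical helper that assumes exactly 64 "0"/"1" characters and an ES2020+ engine (`BigInt`, `DataView.prototype.setBigUint64`).

```javascript
// Hypothetical helper: interpret a 64-character bit string as a double.
function bitsToNumber(bitString) {
  const view = new DataView(new ArrayBuffer(8));
  view.setBigUint64(0, BigInt("0b" + bitString)); // big-endian by default
  return view.getFloat64(0);                      // same byte order
}

const sign = "0";
const exponent = "10000000111";
const mantissa = "0101010000100110011001100110011001100110011001100110";
const full = sign + exponent + mantissa;

console.log(full.length);                      // 64
console.log(bitsToNumber(full));               // 340.15
console.log(BigInt("0b" + full).toString(16)); // 4075426666666666
```

The hexadecimal form is just a more compact way to write the same 64 bits.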

From binary to number:

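To convert it back to our number, just apply this formula, where $s$ is the sign bit, $e$ the biased exponent and $(1.m)_2$ the mantissa with its implicit leading 1:

$$(-1)^{s} \times 2^{e - 1023} \times (1.m)_2$$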

Sign: 0

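Exponent: $e = 10000000111_2 = 1 \cdot 2^{0} + 1 \cdot 2^{1} + 1 \cdot 2^{2} + \dots + 0 \cdot 2^{9} + 1 \cdot 2^{10} = 1031$, so $e - 1023 = 8$

Mantissa: 0101010000100110011001100110011001100110011001100110, with the implicit leading 1: $(1.m)_2 = 1 + 0 \cdot 2^{-1} + 1 \cdot 2^{-2} + 0 \cdot 2^{-3} + \dots + 0 \cdot 2^{-52} \approx 1.3287109375$

Number: $(-1)^{0} \times 2^{8} \times 1.3287109375 \approx 340.15$ (the stored value is a tiny bit below 340.15, because the infinite expansion of 0.15 was cut off after 52 mantissa bits)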
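The decoding formula can be applied by hand in code, mirroring the two sums above. `decode` is a hypothetical helper and only handles normalized numbers (exponent field neither all 0s nor all 1s). Every partial sum fits in 53 bits, so the floating-point arithmetic here is exact.

```javascript
// Hypothetical helper: (-1)^s * 2^(e - 1023) * (1.m)_2 for normalized numbers.
function decode(signBit, exponentBits, mantissaBits) {
  const BIAS = 1023;

  // Exponent: sum of bit * 2^position, least significant bit on the right.
  let exponent = 0;
  for (let i = 0; i < exponentBits.length; i++) {
    const position = exponentBits.length - 1 - i;
    exponent += Number(exponentBits[i]) * 2 ** position;
  }

  // Mantissa: implicit leading 1 plus bit_i * 2^-i for i = 1..52.
  let significand = 1;
  for (let i = 0; i < mantissaBits.length; i++) {
    significand += Number(mantissaBits[i]) * 2 ** -(i + 1);
  }

  return (-1) ** Number(signBit) * 2 ** (exponent - BIAS) * significand;
}

const mantissa = "0101010000100110011001100110011001100110011001100110";
console.log(decode("0", "10000000111", mantissa)); // 340.15
console.log(decode("1", "10000000111", mantissa)); // -340.15
```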

Zero and denormal numbers:

Zero is represented specially:

sign = 0 for positive zero, 1 for negative zero

biased exponent = 0

mantissa = 0
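Positive and negative zero really are two different bit patterns, which can be observed directly. `bitsOf` is a hypothetical helper (ES2020+ for `getBigUint64`); `Object.is` and `===` are standard.

```javascript
// Hypothetical helper: the 64 raw bits of a number, as a string.
function bitsOf(value) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, value);
  return view.getBigUint64(0).toString(2).padStart(64, "0");
}

console.log(bitsOf(0));        // 64 zeros
console.log(bitsOf(-0));       // "1" followed by 63 zeros
console.log(0 === -0);         // true: === treats them as equal
console.log(Object.is(0, -0)); // false: Object.is tells them apart
console.log(1 / -0);           // -Infinity: the sign survives division
```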

Denormalized numbers:

The number representations described above are called normalized, meaning that the implicit leading binary digit is a 1. To reduce the loss of precision when an underflow occurs, IEEE 754 can also represent numbers smaller than the smallest normalized number, by making the implicit leading digit a 0. Such numbers are called denormal (or subnormal): they are encoded with a biased exponent of 0 and a nonzero mantissa, and their value is $(-1)^{s} \times 2^{-1022} \times (0.m)_2$. They have fewer significant digits than a normalized number, but they allow a gradual loss of precision when the result of an arithmetic operation is not exactly zero but is too close to zero to be represented as a normalized number.
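Here is a sketch of denormals in practice. It assumes an engine that supports denormals (all major JavaScript engines do), so `Number.MIN_VALUE` is the smallest denormal, 2^-1074. The literal `2.2250738585072014e-308` is the smallest normalized double, 2^-1022; `bitsOf` is a hypothetical helper.

```javascript
// Hypothetical helper: the 64 raw bits of a number, as a string.
function bitsOf(value) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, value);
  return view.getBigUint64(0).toString(2).padStart(64, "0");
}

const smallestNormal = 2.2250738585072014e-308; // 2^-1022
const smallestDenormal = Number.MIN_VALUE;      // 2^-1074

// Smallest normal: exponent field 00000000001, mantissa all zeros.
console.log(bitsOf(smallestNormal).slice(1, 12)); // 00000000001
// Smallest denormal: exponent field 0, mantissa 000...0001.
console.log(bitsOf(smallestDenormal));            // 63 zeros then "1"

// Gradual underflow: halving the smallest normal number does not give 0;
// it gives a denormal with exponent field 0 and mantissa 1000...0.
const half = smallestNormal / 2;
console.log(half > 0);                   // true
console.log(bitsOf(half).slice(1, 12));  // 00000000000
console.log(bitsOf(half).slice(12, 13)); // 1
```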

Visual representation:

Along with this article, I made a visual representation of the IEEE 754 64-bit floating-point format; you can find it here: https://lockee14.github.io/64_bit_IEEE-754_convertor/

The GitHub repo for this project is here: https://github.com/lockee14/64_bit_IEEE-754_convertor

Notes:

Resources:

- Write Great Code, Volume 1: Understanding the Machine, chapter 4: Floating-Point Representation

- https://www.ecma-international.org/ecma-262/10.0/index.html

- http://www.dsc.ufcg.edu.br/~cnum/modulos/Modulo2/IEEE754_2008.pdf

- https://en.wikipedia.org/wiki/IEEE_754

- https://www.h-schmidt.net/FloatConverter/IEEE754.html

Archives:

- Number in javascript - 10/14

- Double bitwise not in javascript - 07/28

- Why this blog - 07/25